Research

This page is for research-related stuff from 2012 forward. We will add things to this page as they become available (as promptly as possible). My research generally falls into two broad categories: 1) Robots and 2) Healthcare AI. This page is also for sharing various resources we've created to perform this work (programming code, 3D printing designs, surveys, etc.), but if there is something not posted here you are interested in, just email me.

I. Robot Stuff

Conversational Robots

We are currently working on building conversational robots for people living with dementia and Alzheimer's disease. More broadly, the goal is to use conversational speech systems to help people with aging-related issues as they get older. We do this by fine-tuning specialized large language models (LLMs) to detect "speech biomarkers" of cognition. These biomarkers can be used to track cognitive impairment over time, and the same models can help address the difficulties older adults have when interacting with artificial speech systems onboard robots, chatbots, virtual avatars, etc. Those difficulties stem from the fact that older adults often have changed speech patterns (e.g., anomia, prosody changes, slurred pronunciation, disrupted turn-taking), which create communication challenges. Interestingly, the "speech biomarkers" related to those difficulties often show up as the first symptom of cognitive impairment, years before any formal diagnosis or any other symptom.

The same speech system can also be used with younger adults, e.g., those with autism or social anxiety. The overall dream is to build AI that can communicate with anyone, regardless of their life stage or situation, to help those individuals as well as their family members.

- Speech System Architecture description - main paper describing the architecture of the Dementia Speech System used onboard conversational robots, including how the "speech biomarkers" are calculated in real time

- Indiana Dataset (speech data) - Contains audio-visual recordings of 27 people living with dementia (PLwD) having conversations with a robot. This is sensitive data and is not publicly available; however, if you are interested in it, please contact us
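The page doesn't spell out which "speech biomarkers" the Dementia Speech System computes. As a minimal sketch only, assuming the speech recognizer returns word-level start and end times, the Python below computes three timing features often discussed in this area (speech rate, pause rate, and mean pause length) for a single turn. The `Word` type, the `timing_features` function, and the 0.25-second pause cutoff are hypothetical choices for illustration, not the system's actual features or thresholds.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """One recognized word with start/end times in seconds (hypothetical ASR output)."""
    text: str
    start: float
    end: float


def timing_features(words: list[Word], pause_threshold: float = 0.25) -> dict[str, float]:
    """Compute simple timing features over one speaker turn.

    Gaps between consecutive words longer than pause_threshold seconds
    (an assumed cutoff) are counted as pauses.
    """
    if len(words) < 2:
        raise ValueError("need at least two words")
    duration = words[-1].end - words[0].start
    gaps = [nxt.start - cur.end for cur, nxt in zip(words, words[1:])]
    pauses = [g for g in gaps if g > pause_threshold]
    return {
        "words_per_minute": 60.0 * len(words) / duration,
        "pauses_per_minute": 60.0 * len(pauses) / duration,
        "mean_pause_s": sum(pauses) / len(pauses) if pauses else 0.0,
    }


# Example: a short turn with one long hesitation before "to".
turn = [Word("I", 0.0, 0.2), Word("went", 0.3, 0.6), Word("to", 1.6, 1.7),
        Word("the", 1.8, 1.9), Word("store", 2.0, 3.0)]
print(timing_features(turn))
```

In a real-time setting, features like these could be recomputed after each turn, or over a sliding window of recent turns, and tracked over time.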

Robotic Pets - Project Page

We are currently working on research projects combining socially assistive robots (SARs) with in-home sensor networks (e.g., smart homes, IoT). The goal is to see whether we can improve the mental and physical functioning of older adults with dementia, depression, and other illnesses who still live independently in their own homes. Over time, we have expanded this research to broader groups, such as children and young adults. The aim is to see whether such social robots can help people keep living in their own homes longer, and whether they can serve as a tool for monitoring people's everyday health beyond the clinic walls.

Our recent work has focused on developing AI models (machine learning, deep learning) of the robotic sensor data, including novel modeling methods for such data based on few-shot learning, generative replay models, transformer models, and transfer learning. This research has also produced novel robotic sensor devices, datasets, and specialized instruments for understanding people's everyday health. Links to a few of those resources are below:

- Robotic Pet Interaction Dataset - contains over 300 million rows of data about real-world human-robot interactions from 26 participants in South Korea and the US, collected in their own homes (approx. 173 hours of real-world interaction data)

- SoREMA Instrument - survey for conducting EMA (ecological momentary assessment) research with social robots

Social AI & Robotic Personalities

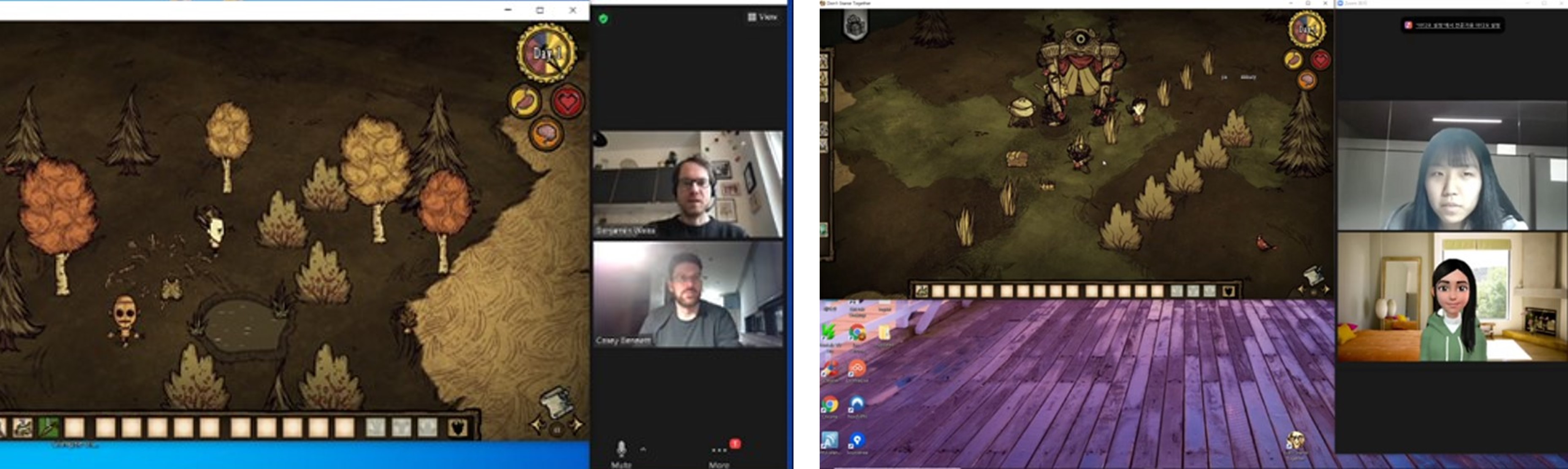

We are currently working to create "Social AI" for robots and virtual avatars that can operate autonomously in social scenarios, such as cooperative game paradigms (e.g., video games). The idea is to use those cooperative game paradigms to reverse-engineer social cognition by attempting to "break" normal social interactions. The research incorporates various modalities, such as speech, gesture, and facial expressions. This also relates to research we've done on creating emergent robotic personalities from very basic building blocks without explicit programming, by combining data from real-world physical human-robot interactions with simulations.

More recently, we have been developing a novel speech dialogue system for this, based on multimodal transformer models, NLP, and deep learning. The system is multilingual (English, Korean, Japanese), allowing us to study how speakers of different languages interact differently with artificial agents. It also allows us to explore social cognition in individuals with "changed speech" patterns, such as children or people with dementia or multiple sclerosis.

Speech Dialogue System - Primarily for robots involved in cooperative game-playing. We have not yet publicly released the speech system, but if you are interested in it, please contact us

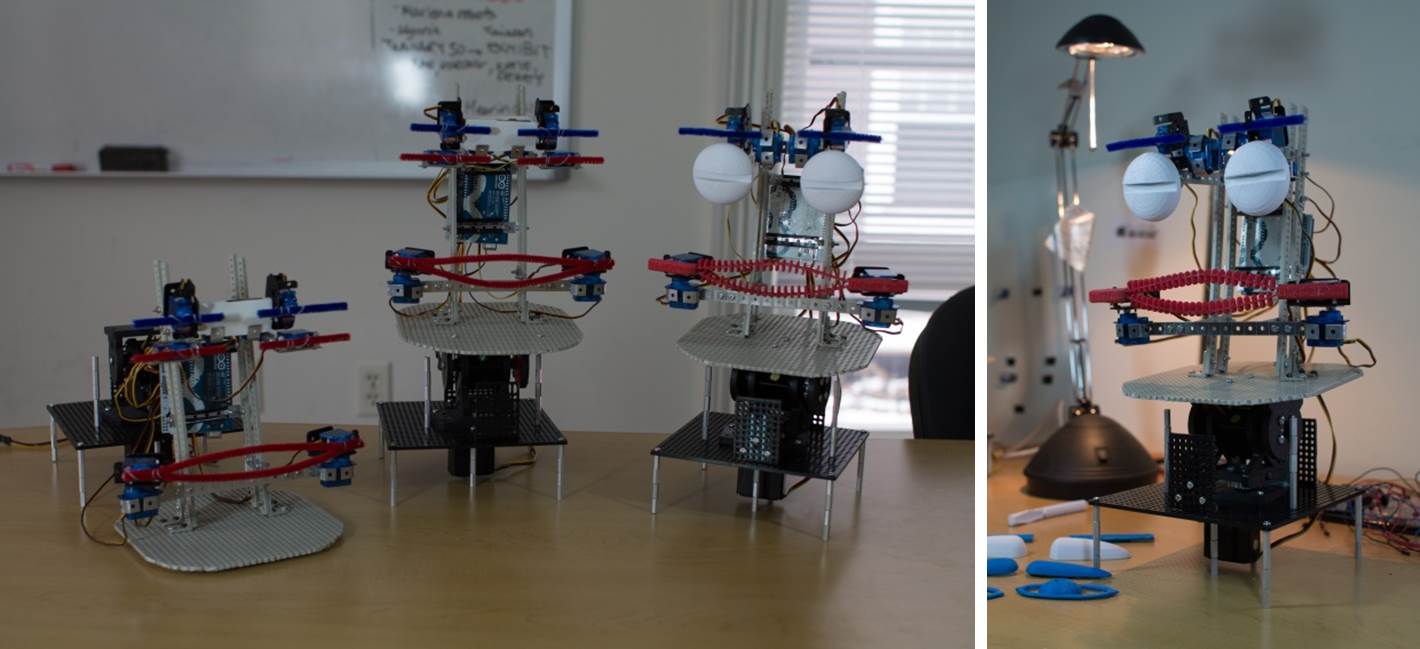

MiRAE (Robot Face) - Project Page

The goal of this project is to explore the minimal features necessary for a robotic face to engage in meaningful affective social interaction, as well as to study questions related to the temporal dynamics of social cognition in humans and in human-robot interaction. The broader aim is to create an inexpensive and replicable robotic face that can help further the "science" of robots, and/or simply make such robotic platforms more accessible to the general public. We are making the following resources available for research/scientific and academic use. We would appreciate it if you would cite the following paper if you use any of these resources: Casey C. Bennett and Selma Sabanovic (2014) "Deriving Minimal Features for Human-Like Facial Expressions in Robotic Faces." International Journal of Social Robotics. 6(3): 367-381.

MiRAE Construction Manual - Construction manual for building a robotic face from scratch out of easily accessible components

RobotFace Library - Programming code (C++/Arduino Library) for controlling a robot face, making expressions, etc.

FEI instrument (English version, Japanese version) - Facial Expression Identification instrument for robot face experiments

3D Printing Schematics - for printing a fully 3D-printed robot face/head (to be released Summer 2014)

Interactive 3D Model of MiRAE - via our talented colleagues Chris Myles and Ray Chen (note: takes a minute or two to load)

Design notebooks - this is a sample of design sketchbooks/notes about the process (we have hundreds of these)

TestServoPos code - Arduino sketch for determining the 90-degree position of any servo

MiRAE museum exhibit - one of the interactive robotic faces is currently on display as part of a public art installation (exhibit booklet, pg.8)
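The TestServoPos sketch itself is not reproduced here. For background on why a servo's 90-degree position has to be found empirically: the standard Arduino `Servo` library converts `write(angle)` into a pulse width by linearly mapping 0-180 degrees onto a default range of 544 to 2400 microseconds with Arduino's integer `map()`, so `write(90)` sends about 1472 microseconds rather than the nominal 1500, and individual servos also differ mechanically. The Python below mirrors that arithmetic so the pulse for any angle can be checked; `angle_for_pulse` is a hypothetical helper (not part of the library or of TestServoPos) that finds the `write()` argument closest to a servo's measured center pulse.

```python
# Default pulse limits from the Arduino Servo library (MIN_PULSE_WIDTH, MAX_PULSE_WIDTH).
DEFAULT_MIN_US = 544
DEFAULT_MAX_US = 2400


def arduino_map(x: int, in_min: int, in_max: int, out_min: int, out_max: int) -> int:
    """Integer linear rescaling, matching Arduino's map() for non-negative inputs."""
    return (x - in_min) * (out_max - out_min) // (in_max - in_min) + out_min


def angle_to_pulse_us(angle: int, min_us: int = DEFAULT_MIN_US,
                      max_us: int = DEFAULT_MAX_US) -> int:
    """Pulse width in microseconds that Servo.write(angle) would send."""
    angle = max(0, min(180, angle))  # angle arguments are clamped to 0..180
    return arduino_map(angle, 0, 180, min_us, max_us)


def angle_for_pulse(target_us: int, min_us: int = DEFAULT_MIN_US,
                    max_us: int = DEFAULT_MAX_US) -> int:
    """Hypothetical helper: the write() angle whose pulse is closest to target_us."""
    return min(range(181),
               key=lambda a: abs(angle_to_pulse_us(a, min_us, max_us) - target_us))


print(angle_to_pulse_us(90))   # pulse sent by write(90) with default limits
print(angle_for_pulse(1500))   # angle argument closest to a 1500-microsecond center
```

For example, if a particular servo centers at 1500 microseconds, `angle_for_pulse(1500)` returns 93 rather than 90 under the default limits. Alternatively, the pulse can be set directly with the library's `writeMicroseconds()`.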

II. Healthcare/AI Stuff

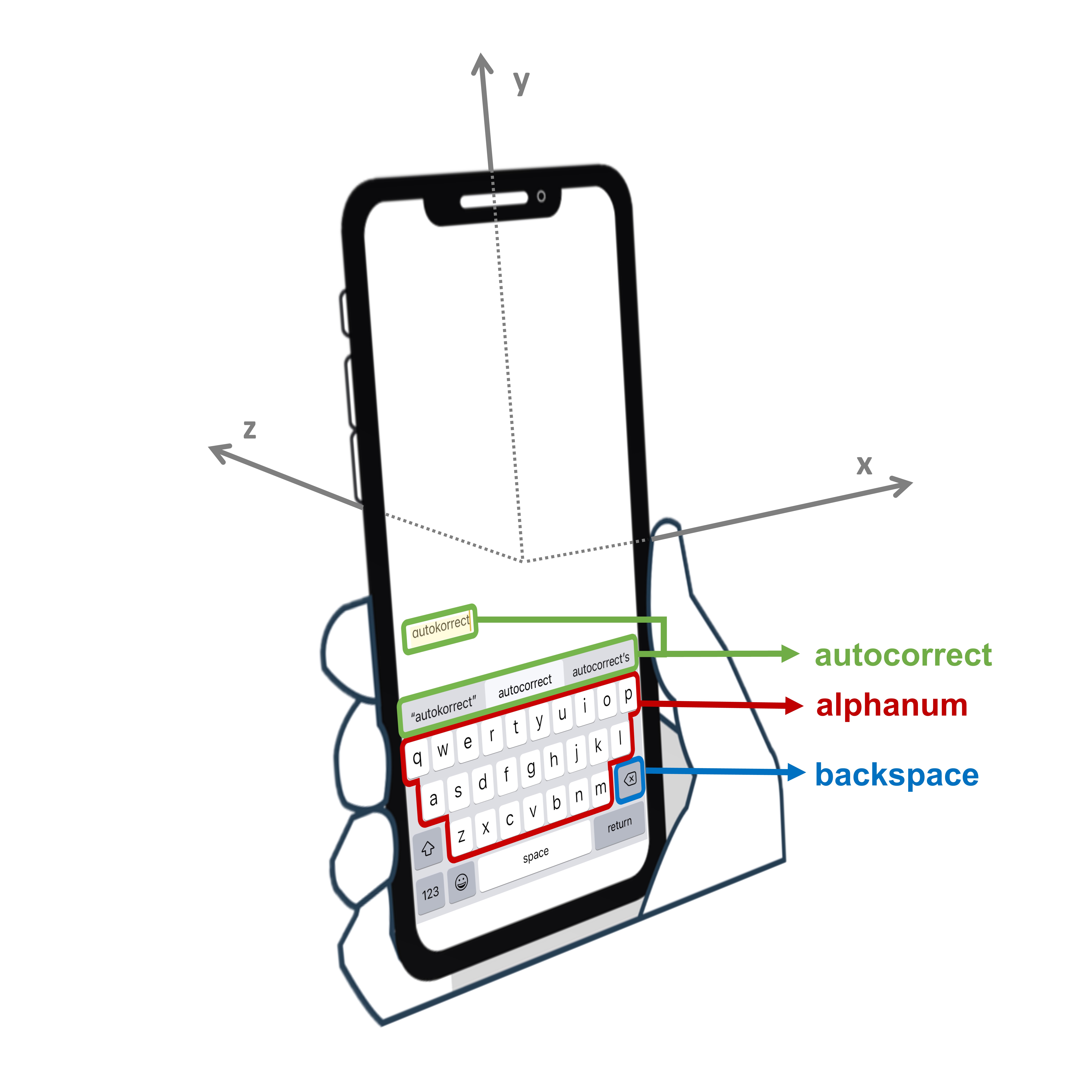

BiAffect Project - Project Page

This project focuses on using passive interaction data from smartphones (e.g., keyboard typing dynamics) to track and predict health status in patients with depression and bipolar disorder. The idea is to monitor people's everyday health by taking advantage of technology they already use, rather than waiting for them to show up at the doctor's office. This is a partnership with researchers at several major universities around the world. Our role in the project focuses mostly on the machine learning side: how to model the data in a way that is applicable to real-world clinical care.

I wrote a blog post for the journal Nature explaining the concept in plain language; check it out: https://go.nature.com/3hCUqp0
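The page doesn't list which typing features BiAffect models. As a minimal sketch, assuming only a list of keypress timestamps in seconds, the Python below groups keypresses into typing sessions and summarizes inter-key intervals (the time between consecutive keypresses), a basic keystroke-dynamics feature. The function names and the 5-second session gap are hypothetical choices, not BiAffect's actual pipeline.

```python
from statistics import median, pstdev


def split_sessions(timestamps: list[float], max_gap_s: float = 5.0) -> list[list[float]]:
    """Group keypress times (seconds) into sessions; a gap > max_gap_s starts a new one."""
    sessions: list[list[float]] = []
    for t in sorted(timestamps):
        if sessions and t - sessions[-1][-1] <= max_gap_s:
            sessions[-1].append(t)
        else:
            sessions.append([t])
    return sessions


def interkey_features(session: list[float]) -> dict[str, float]:
    """Median and population standard deviation of inter-key intervals in one session."""
    intervals = [b - a for a, b in zip(session, session[1:])]
    if not intervals:
        raise ValueError("session needs at least two keypresses")
    return {
        "n_keys": len(session),
        "median_iki_s": median(intervals),
        "sd_iki_s": pstdev(intervals),
    }


# Example: two bursts of typing separated by a long break.
taps = [0.0, 0.2, 0.4, 0.7, 20.0, 20.5, 21.0]
for session in split_sessions(taps):
    print(interkey_features(session))
```

The median is used as the central summary so that a single long hesitation within a session doesn't dominate it; the standard deviation then captures how irregular the typing rhythm is.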

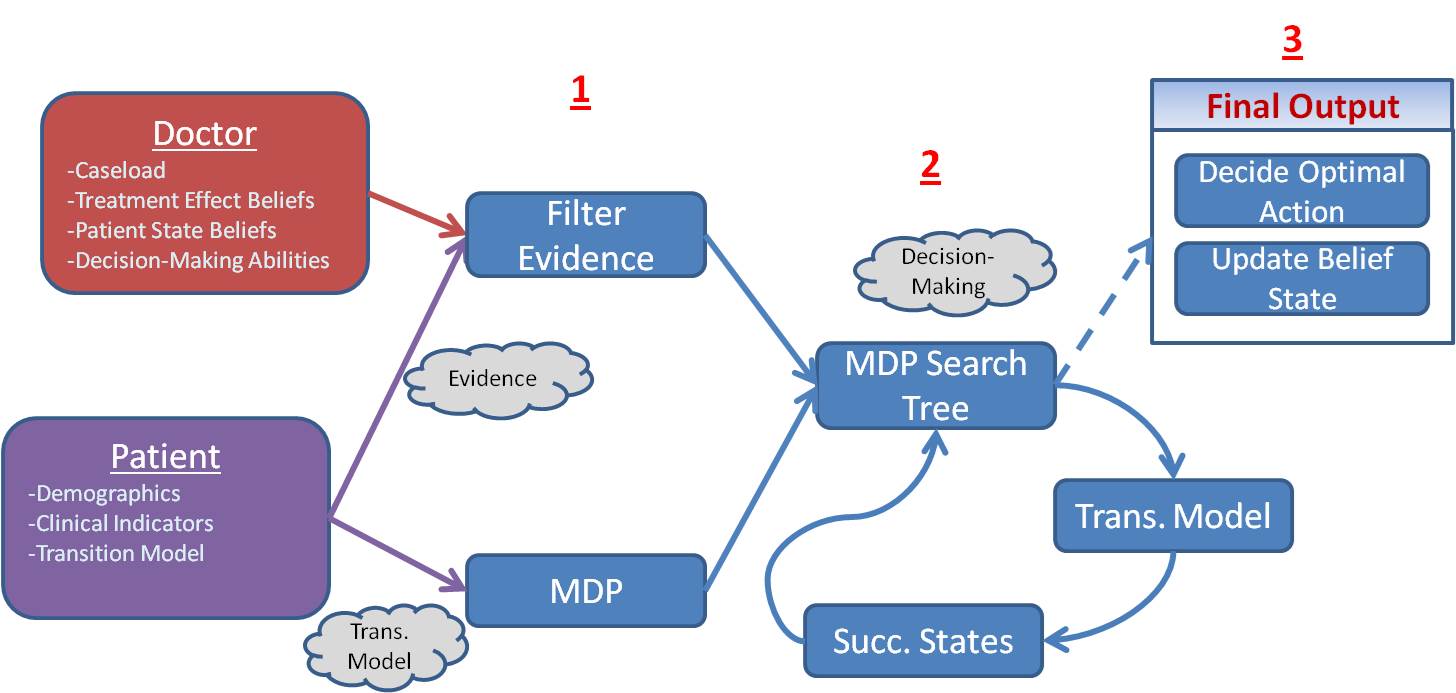

AI Framework for Simulating Clinical Decision Making

This project aimed to develop a clinical AI that could "think like a doctor," in order to better assist clinicians and patients with challenging healthcare decisions. The original paper associated with this research is Bennett & Hauser (2013) "Artificial Intelligence Framework for Simulating Clinical Decision-Making: A Markov Decision Process Approach." Artificial Intelligence in Medicine. 57(1): 9-19. We may release a scaled-down open-source version of that code for academic and research use. If you have questions, please email me.
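The Bennett & Hauser (2013) framework is built on a Markov decision process (MDP). That code isn't posted here, so the following is only a generic illustration of the kind of computation involved: value iteration, one standard way to solve an MDP, applied to a toy three-state problem. Every state, action, transition probability, reward, and cost below is made up for illustration and is not clinical data or the paper's model.

```python
# Toy MDP for illustration only: every number below is hypothetical.
STATES = ["improving", "stable", "worsening"]
ACTIONS = ["treat_a", "treat_b"]

# P[state][action] -> list of (next_state, probability); each list sums to 1.
P = {
    "improving": {"treat_a": [("improving", 0.8), ("stable", 0.2)],
                  "treat_b": [("improving", 0.6), ("stable", 0.4)]},
    "stable": {"treat_a": [("improving", 0.3), ("stable", 0.5), ("worsening", 0.2)],
               "treat_b": [("improving", 0.4), ("stable", 0.3), ("worsening", 0.3)]},
    "worsening": {"treat_a": [("stable", 0.4), ("worsening", 0.6)],
                  "treat_b": [("stable", 0.6), ("worsening", 0.4)]},
}

# Per-step reward for being in a state, minus a per-step cost for the action taken.
STATE_REWARD = {"improving": 1.0, "stable": 0.0, "worsening": -1.0}
ACTION_COST = {"treat_a": 0.0, "treat_b": 0.1}


def q_value(s: str, a: str, V: dict[str, float], gamma: float) -> float:
    """Expected discounted return of taking action a in state s, then following V."""
    return STATE_REWARD[s] - ACTION_COST[a] + gamma * sum(p * V[s2] for s2, p in P[s][a])


def value_iteration(gamma: float = 0.9, tol: float = 1e-8):
    """Apply Bellman optimality updates in place until values change by less than tol."""
    V = {s: 0.0 for s in STATES}
    while True:
        delta = 0.0
        for s in STATES:
            best = max(q_value(s, a, V, gamma) for a in ACTIONS)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    # Greedy policy with respect to the converged values.
    policy = {s: max(ACTIONS, key=lambda a: q_value(s, a, V, gamma)) for s in STATES}
    return V, policy


values, policy = value_iteration()
print(values)
print(policy)
```

With these toy numbers, the resulting policy chooses `treat_a` in the `improving` and `stable` states and `treat_b` in the `worsening` state; changing `STATE_REWARD` or `ACTION_COST` shifts that trade-off.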

RxNorm

This project focused on utilizing and adapting RxNorm to develop smarter ways to collect patient medication history in real-world electronic health records. The main paper associated with this work is Bennett (2012) "Utilizing RxNorm to support practical computing applications: Capturing medication history in live electronic health records." Journal of Biomedical Informatics. 45(4): 634-641. We released code for adapting RxNorm and implementing it in any electronic health record. You can find it below:

- RxNorm Transform Code - Code for extracting and reorganizing RxNorm data so it can be used to support dynamic medication history data entry and search
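The RxNorm Transform Code isn't reproduced here. As a minimal sketch of the general idea, assuming a local copy of RxNorm's `RXNCONSO.RRF` file (pipe-delimited, one concept name per line), the Python below keeps RxNorm-sourced, non-suppressed clinical drug (`SCD`) and branded drug (`SBD`) names and builds a sorted index that supports the kind of prefix search a medication-history entry field might use. The column positions follow the RxNorm technical documentation's field order for `RXNCONSO.RRF`; the function names and the choice of term types are illustrative assumptions.

```python
import bisect
from typing import Iterable

# Zero-based column positions in RXNCONSO.RRF (18 fields, each line ends with '|').
RXCUI, SAB, TTY, STR, SUPPRESS = 0, 11, 12, 14, 16
WANTED_TTYS = {"SCD", "SBD"}  # semantic clinical drug, semantic branded drug

Index = list[tuple[str, str, str]]  # (lowercased name, display name, RXCUI)


def load_drug_names(lines: Iterable[str]) -> Index:
    """Build a sorted, de-duplicated name index from RXNCONSO.RRF lines."""
    rows = set()
    for line in lines:
        fields = line.rstrip("\r\n").split("|")
        if len(fields) < 18:
            continue  # skip blank or malformed lines
        if (fields[SAB] == "RXNORM" and fields[TTY] in WANTED_TTYS
                and fields[SUPPRESS] == "N"):
            rows.add((fields[STR].lower(), fields[STR], fields[RXCUI]))
    return sorted(rows)


def search_prefix(index: Index, prefix: str, limit: int = 10) -> list[tuple[str, str]]:
    """Return up to `limit` (RXCUI, name) pairs whose name starts with `prefix`, ignoring case."""
    key = prefix.lower()
    # (key,) sorts before any (key, ...) tuple, so this lands on the first candidate.
    i = bisect.bisect_left(index, (key,))
    results = []
    while i < len(index) and len(results) < limit and index[i][0].startswith(key):
        results.append((index[i][2], index[i][1]))
        i += 1
    return results
```

For example, the index could be built once inside a `with open("RXNCONSO.RRF", encoding="utf-8") as fh:` block via `load_drug_names(fh)`, and each keystroke in a medication-entry field could then call `search_prefix(index, typed_text)`. Sorting once and using binary search keeps each lookup fast without a database.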